How can businesses get better insights from unstructured Data by migrating from legacy systems? Graeme King, GTM Lead for Data Strategy and Advisory, talks us through the process.

The way the world generates Data has changed. As individuals digitize more aspects of their lives than ever before – from social media profiles to wearable fitness tech, IoT kitchenware to mobile banking – we’re creating Data in phenomenal volumes, at breakneck speed. It’s estimated that 2.5 quintillion bytes of Data are produced every day, with 74 zettabytes of Data expected to be created by the end of this year.

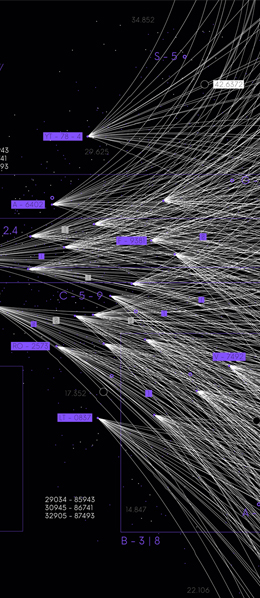

Most of this Data – around 90% – is unstructured. In other words, the vast majority of Data no longer fits the ‘traditional’, structured definition: neat, clearly defined and readable fields and values that can be stored in a relational database management system (RDBMS). We’ve moved away from Data that fits into predefined Data models (think Excel spreadsheet rows and columns) towards richer, more complex Data sets that can give us deeper and more nuanced insights into everything from product origins to customer behaviour and social media sentiment.

Yet while businesses are actively collecting this Data, the technology they use to process, store and analyze it isn’t always up to the task. Legacy systems designed to process structured Data aren’t fully compatible with unstructured Data, yet it’s estimated that they still account for 31% of organizations’ technology systems worldwide. Sixty-one percent of financial firms surveyed by Adobe last year, for instance, cited legacy technology as a factor holding back their marketing and customer experience.

In short, legacy technology is preventing organizations from fully extracting value from their unstructured Data – and it places additional strain on the organization. An organization that depends on legacy systems to house Big Data is likely accumulating more and more physical systems to cope with its expanding unstructured Data footprint, which in turn increases the management, monitoring and security needed to support them. As a result, most organizations have opted for – or ended up with – a hybrid of cloud and legacy architecture, which adds further strain if the systems are not integrated effectively.

If you want to embrace a Data-driven future, you need to be able to access and analyze most of the Data at your disposal, not just a fraction of it. That means being able to understand, process and act on unstructured Data at scale.

What is unstructured Data?

Unstructured Data doesn’t follow the ‘Excel spreadsheet’ format of information, which makes it harder to search and analyze. It can include text files, photographs, documents, videos, audio files, social media profiles, survey responses, blog posts, information compiled from wearables, IoT gadgets, sensor Data, website analytics; the list goes on.

To understand the difference between structured and unstructured Data in terms of usefulness, compare an entry in an old-fashioned Yellow Pages phone book with a Facebook profile. The first is easy to search and extract meaning from, but the second gives a much richer picture of the person, offering more insight than a simple name, address and telephone number. For businesses in today’s competitive digital market, that richer analysis is key to understanding their market, their customers’ behaviour and the commercial opportunities that Data makes available.
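As a rough illustration of that contrast, the minimal Python sketch below stores a phone-book style entry in a fixed-schema SQLite table and then tries to pull similar facts out of a free-text post. The names, address, phone number and post text are all hypothetical, and the regex and keyword check are deliberately naive stand-ins for real text analytics, not a recommended technique.

```python
import re
import sqlite3

# Structured Data: a phone-book style entry fits a fixed schema of named columns.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE directory (name TEXT, address TEXT, phone TEXT)")
conn.execute(
    "INSERT INTO directory VALUES (?, ?, ?)",
    ("J. Smith", "12 High Street", "555-0142"),  # hypothetical entry
)

# Retrieving a value is a direct query against a known column.
phone = conn.execute(
    "SELECT phone FROM directory WHERE name = ?", ("J. Smith",)
).fetchone()[0]
print(phone)  # 555-0142

# Unstructured Data: a hypothetical social media post has no predefined fields.
post = (
    "Just finished a 10k with my new fitness watch! "
    "Loving it so far. Club members can reach me on 555-0142."
)

# Even simple facts must be extracted by parsing the text. A naive regex for
# phone numbers and a keyword check stand in for real text analytics here.
found_phones = re.findall(r"\b\d{3}-\d{4}\b", post)
positive = any(word in post.lower() for word in ("loving", "love", "great"))
print(found_phones, positive)  # ['555-0142'] True
```

The structured lookup only ever returns what the schema defines, while the post also carries activity and sentiment signals that no column anticipated; extracting those reliably at scale is the harder problem the rest of this article is concerned with.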

How do legacy systems impact unstructured Data Analysis?

There are three main issues that hold back unstructured Data processing and analysis when combined with legacy systems:

Volume

Managing escalating amounts of unstructured Data in legacy systems just isn’t feasible. Because most legacy systems are on-premises solutions, they lack the flexibility to cope with an influx of large amounts of Data, and storage must be expanded manually to meet demand. Migration becomes a costly exercise, with Data needing to be moved to new systems as space fills up. This drains time, money and IT resources, for a solution that still can’t deliver the full results you need.

Speed

Legacy systems don’t offer real-time Data processing, and they struggle to process Big Data with speed and consistency. For many organizations this is a source of frustration, but for those with ‘mission-critical’ Data operations, such as in logistics, travel or healthcare, it poses a serious risk.

Diversity

Unstructured Data comes in a variety of formats, while legacy systems were designed with a rigid idea of what ‘Data’ should look like. As a result, legacy systems often need to be manually adapted to incorporate unstructured Data, and they lack the full capability needed to analyze, search and extract insight from these disparate Data formats. Adding an image to an Excel spreadsheet, for instance, might give you more information about a particular entry, but it won’t let you extract insights from those images at scale.

How can businesses modernize their Data systems to incorporate unstructured Data?

The answer to incorporating unstructured Data lies in moving from on-premises to cloud architecture to get the storage and processing flexibility you need. But if you want unstructured Data to become a viable commercial asset, it’s crucial that you choose the right cloud migration strategy for your organization.

At Agile, we offer a four-step approach to Cloud Migration: identifying your pain points and vision for Data, building a better architecture, phasing delivery with our agile methodology, and supporting your migration after implementation, allowing you to adapt and evolve your Data Strategy as and when you need to.

If legacy systems are preventing you from extracting value from unstructured Data, contact our team to discuss Cloud Migration and integration.